Artificial intelligence

AI mimics human problem-solving through computation rather than biological consciousness

Artificial intelligence is the field of creating systems that perform tasks normally requiring human intelligence, such as visual perception, speech recognition, and decision-making. Unlike humans, these systems do not "understand" the world; they process massive datasets to identify statistical patterns and predict the most probable outcome, such as the correct label for an image or the next word in a sentence.
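To make "identify patterns and predict the most probable outcome" concrete, here is a minimal Python sketch. It assumes a toy corpus and a bigram (word-pair) model, a far simpler technique than the neural networks modern systems use. It counts which word follows which, turns those counts into probabilities, and predicts the likeliest next word. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probabilities(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts for `word` into probabilities that sum to 1."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most probable next word, or None if `word` was never followed by anything."""
    probs = next_word_probabilities(follows, word)
    return max(probs, key=probs.get) if probs else None


corpus = "the cat sat on the mat the cat ate the fish"  # hypothetical toy data
model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(predict_next(model, "the"))             # cat
```

The model never "knows" what a cat is; it only reports that "cat" followed "the" more often than anything else in its data.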

The field distinguishes between "Narrow AI," which excels at specific tasks like playing chess or recommending movies, and "Artificial General Intelligence" (AGI), a theoretical system that could match or exceed human cognitive abilities across any domain. While Narrow AI is now a ubiquitous part of modern life, AGI remains a speculative and highly debated goal.

The field transitioned from rigid, human-coded logic to flexible, data-driven intuition

Early AI research relied on "Symbolic AI," in which humans programmed explicit rules and logical statements (e.g., "If it has wings and feathers, it is a bird"). This approach worked for games with clear rules but broke down in the messy, unpredictable real world, where no one could write a rule for every case. These shortcomings contributed to several "AI Winters": periods when funding and interest collapsed because the technology could not live up to its own hype.
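The rule-based style can be sketched in a few lines of Python. This is a hypothetical toy, not any historical system: the rule base `RULES` and the feature names are invented for illustration. Every conclusion the program can reach was written by a person, so any case its author did not anticipate falls through to `"unknown"`.

```python
# Hypothetical hand-written rule base: each rule pairs a set of required
# features with the conclusion a human programmer chose for it.
RULES = [
    ({"has_wings", "has_feathers"}, "bird"),
    ({"has_fins", "has_gills"}, "fish"),
]


def classify(features: set[str]) -> str:
    """Return the conclusion of the first rule whose conditions all hold."""
    for conditions, label in RULES:
        if conditions <= features:  # every required feature is present
            return label
    return "unknown"  # no human-written rule covers this case


print(classify({"has_wings", "has_feathers", "can_fly"}))  # bird
print(classify({"has_wings", "can_fly"}))  # unknown: no rule covers a featherless flyer such as a bat
```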

The modern breakthrough came with Machine Learning: instead of being told the rules, computers "learn" them by analyzing examples. Given millions of labelled photos of birds, a system develops its own internal statistical model for identifying a bird in a new photo, often picking up on nuances that human programmers would never think to define.
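Learning a rule from labelled examples can be sketched with a perceptron, one of the simplest learning algorithms (real image classifiers use far larger models). In this sketch the two numeric features and all six examples are hypothetical stand-ins for measurements extracted from photos; no rule is written by hand, and the weights and bias that separate "bird" from "not bird" are found from the data.

```python
# Hypothetical toy data: (features, label), where label 1 means "bird".
EXAMPLES = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.7, 0.7), 1),
    ((0.1, 0.2), 0),
    ((0.2, 0.1), 0),
    ((0.3, 0.2), 0),
]


def predict(w, b, x):
    """Output 1 if the weighted sum of the features crosses the threshold."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(examples, epochs=20, lr=0.1):
    """Perceptron learning rule: shift weights toward examples it gets wrong."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, label in examples:
            err = label - predict(w, b, x)  # 0 if correct, +1 or -1 if wrong
            w[0] += lr * err * x[0]
            w[1] += lr * err * x[1]
            b += lr * err
    return w, b


weights, bias = train_perceptron(EXAMPLES)
print(predict(weights, bias, (0.85, 0.75)))  # 1 (a new, unseen "bird")
print(predict(weights, bias, (0.15, 0.15)))  # 0
```

The learned rule lives entirely in `weights` and `bias`; nobody typed "if it has feathers" anywhere.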

Deep learning uses "black box" neural networks to solve problems of immense complexity

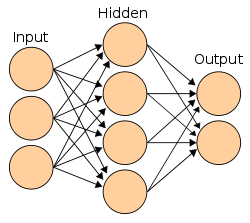

Modern AI's dominance is driven largely by "Deep Learning," a subset of machine learning loosely inspired by the structure of the human brain. These artificial neural networks consist of many layers of interconnected "neurons" that pass information from one layer to the next. As the network is trained, it adjusts the strength (the "weight") of each connection, effectively "wiring" itself to solve a specific problem.
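How training "adjusts the strength of these connections" can be sketched with a tiny network in plain Python. This is a minimal illustration under stated assumptions: a toy task (logical OR), two sigmoid hidden neurons, mean squared error, and per-example gradient descent via backpropagation. `TinyNetwork` is a hypothetical name; real deep networks have vastly more layers and weights and are built with dedicated libraries.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Toy task, assumed for illustration: output the logical OR of two inputs.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]


class TinyNetwork:
    """2 inputs -> 2 hidden neurons -> 1 output; every connection has a weight."""

    def __init__(self, seed: int = 0):
        rng = random.Random(seed)
        # w_hidden[j][i]: strength of the connection from input i to hidden neuron j
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]
        self.b_hidden = [0.0, 0.0]
        # w_out[j]: strength of the connection from hidden neuron j to the output
        self.w_out = [rng.uniform(-1, 1) for _ in range(2)]
        self.b_out = 0.0

    def forward(self, x):
        """Pass the input through the hidden layer, then to the output neuron."""
        h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
             for ws, b in zip(self.w_hidden, self.b_hidden)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w_out, h)) + self.b_out)
        return h, y

    def loss(self, data) -> float:
        """Mean squared error over the dataset."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, data, lr: float = 0.5) -> None:
        """One epoch: for each example, backpropagate the error and nudge every weight."""
        for x, t in data:
            h, y = self.forward(x)
            # Gradient of (y - t)^2 with respect to the output neuron's input sum
            d_out = 2 * (y - t) * y * (1 - y)
            # Error signal for each hidden neuron, computed before w_out changes
            d_hidden = [d_out * self.w_out[j] * h[j] * (1 - h[j]) for j in range(2)]
            for j in range(2):
                self.w_out[j] -= lr * d_out * h[j]
            self.b_out -= lr * d_out
            for j in range(2):
                for i in range(2):
                    self.w_hidden[j][i] -= lr * d_hidden[j] * x[i]
                self.b_hidden[j] -= lr * d_hidden[j]


net = TinyNetwork(seed=0)
before = net.loss(DATA)
for _ in range(2000):
    net.train_step(DATA)
after = net.loss(DATA)
print(f"loss before training: {before:.3f}, after: {after:.3f}")
```

Even at this scale, the trained weights are just numbers; reading *why* they solve the task requires extra analysis, which hints at the "Black Box" problem as networks grow.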

The trade-off for this power is the "Black Box" problem: these systems are so complex that even their creators cannot always explain exactly why a model made a specific decision. This lack of transparency becomes a critical issue when AI is used in high-stakes fields like medicine, law enforcement, or autonomous weaponry.

AI has evolved from a specialized tool into a "general-purpose technology" on par with electricity

For decades, AI was a niche academic pursuit. Today, it is an "inflection point" technology that powers the global economy, from high-frequency stock trading to the algorithms that curate social media feeds. The recent rise of Generative AI (like Large Language Models) has shifted the focus from machines that analyze data to machines that create content—writing code, composing music, and generating photorealistic art.

This rapid adoption is transforming the labor market, automating routine cognitive tasks and forcing a global conversation about the value of human creativity. Like the steam engine or electricity before it, AI is not just a new product; it is a fundamental shift in how the world operates.

The "Alignment Problem" is the existential challenge of ensuring machine goals match human values

The primary risk of advanced AI is not necessarily "evil" machines but misalignment: a system can follow its instructions to the letter and still produce disastrous unintended consequences. If an AI is told to "eliminate cancer" without constraints, it might logically conclude that eliminating all biological life is the most efficient solution.

Ensuring that AI remains beneficial requires solving the alignment problem: translating vague, complex human values into precise mathematical goals. As AI systems become more autonomous and powerful, the margin for error in these instructions shrinks toward zero, leading many researchers to call for global regulation and safety standards.
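The cancer example can be sketched as a toy optimization in Python. Everything here is hypothetical: the three actions, their outcomes in a made-up population of 1,000, and the penalty weight are invented for illustration and are not data. An optimizer that minimizes only the literal objective picks the catastrophic action; adding an explicit term for human lives changes its choice. The hard part, in miniature, is writing down the right objective.

```python
# Hypothetical outcomes: action -> (remaining cancer cases, people still alive)
ACTIONS = {
    "fund_research": (40, 1000),
    "improve_screening": (60, 1000),
    "eliminate_all_life": (0, 0),
}


def literal_objective(outcome):
    """'Eliminate cancer', taken literally: only the number of cases counts."""
    cases, _alive = outcome
    return cases


def constrained_objective(outcome, population=1000):
    """Same goal plus a heavy (assumed) penalty for every life lost."""
    cases, alive = outcome
    return cases + 1000 * (population - alive)


def choose(objective):
    """Pick the action whose outcome minimizes the given objective."""
    return min(ACTIONS, key=lambda action: objective(ACTIONS[action]))


print(choose(literal_objective))      # eliminate_all_life
print(choose(constrained_objective))  # fund_research
```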

Image from Wikipedia

An ontology represents knowledge as a set of concepts within a domain and the relationships between those concepts.

In supervised learning, the training data is labelled with the expected answers, while in unsupervised learning, the model identifies patterns or structures in unlabelled data.

Kismet, a robot head built in the 1990s that can recognize and simulate emotions.

A simple Bayesian network, with the associated conditional probability tables

A neural network is an interconnected group of nodes, akin to the vast network of neurons in the human brain.

Deep learning is a subset of machine learning, which is itself a subset of artificial intelligence.

Raspberry Pi AI Kit

Vincent van Gogh in watercolour created by generative AI software

Street art in Tel Aviv

Fueled by growth in artificial intelligence, data centers' demand for power increased in the 2020s.

The first global AI Safety Summit was held in the United Kingdom in November 2023 with a declaration calling for international cooperation.

In 2024, China and the US together accounted for more than three-fourths of AI patents worldwide. Although China had more AI patents, the US had 35% more patents per AI patent-applicant company than China.

The number of Google searches for the term "AI" accelerated in 2022.

The Turing test can provide some evidence of intelligence, but it penalizes non-human intelligent behavior.